i3

Insights into incitement

A research platform for detecting incitement across various sources using Artificial Intelligence.

Methodology

Methodology and Ts and Cs for the MMA Incitement Detection Tool

Overview

Insights into Incitement, or i3, has been developed to help detect and track degrees of incitement to violence on social media and elsewhere online. The objective of i3 is to develop an intelligent system that analyses text data, such as social media posts, articles, political party commentaries, and public complaints, to assess and rank the level of potential incitement to harm.

The system uses a custom classifier and sentiment analysis to rank each piece of analysed text on a RAG scale (Red, Amber, Green) by severity of potential incitement.

Background

The potential for violence within South Africa in the lead-up to its national and provincial elections is high. There is precedent: in July 2021 the country saw an explosion of violence in the KwaZulu-Natal province, resulting in the death of over 300 people and billions of rand in damage to infrastructure. This violence was partly incited. An inquiry by the South African Human Rights Commission highlighted the role that social media users and the platforms themselves played in instigating and inciting the violence. Minorities are at particular risk, with the threat fuelled by xenophobia and Afrophobia, as are vulnerable groups, including women.

Despite the likelihood of incitement online, and despite these factors being well known to key stakeholders, from the Electoral Commission (IEC) to the South African National Editors' Forum, there remains a gap in identifying, tracking and analysing the nature of incitement online in South Africa. This means that incitement is not currently accurately reported or assessed anywhere, which in turn makes it difficult to address. The i3 tool uses a set of developed indicators to identify instances of incitement across social media and give insight into levels of incitement online. The tool builds on MMA’s existing work around mis- and disinformation and its Real411.org project, which provides a platform for the public to report digital harms.

Definition of Incitement

In developing the i3 tool, an expert group worked through challenges around the elements and indicators of incitement. (A separate discussion document focusing on the specific issues will be produced.) For practical purposes, the following definition was adopted: incitement, for the purposes of this tool, is defined as any text-based communication that encourages, glorifies, or directly calls for acts of violence.

Classification

The classifier evaluates text content to determine the severity of incitement using a Red-Amber-Green (RAG) scale. Red indicates the highest risk of incitement, Amber denotes a moderate level, and Green signifies low or no incitement. Legal professionals from Media Monitoring Africa (MMA) guided the collection and curation of training data to capture nuanced incitement behaviours across different contexts.
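
As an illustration of the RAG scale, the Python sketch below turns per-label confidence scores into a single rating. It is not the i3 implementation: the source does not say how classifier output is mapped to Red, Amber or Green. The sketch assumes the classifier returns one score per label, and the label names, the `rag_rating` function and the `review_threshold` parameter are all hypothetical.

```python
from typing import Any, Dict, List

# Hypothetical label names, listed in ascending order of severity.
RAG_ORDER = ["GREEN", "AMBER", "RED"]


def rag_rating(classes: List[Dict[str, Any]], review_threshold: float = 0.5) -> Dict[str, Any]:
    """Pick the highest-scoring RAG label and flag low-confidence results.

    `classes` is assumed to be a list of {"Name": label, "Score": confidence}
    entries, one per label. `review_threshold` is an illustrative cut-off
    below which a result is flagged for human review.
    """
    scores = {str(c["Name"]).upper(): float(c["Score"]) for c in classes}
    if not scores or set(scores) - set(RAG_ORDER):
        raise ValueError("expected scores for GREEN, AMBER and/or RED only")

    # Highest score wins; ties resolve towards the more severe label.
    label = max(scores, key=lambda name: (scores[name], RAG_ORDER.index(name)))
    return {
        "label": label,
        "score": scores[label],
        "needs_review": scores[label] < review_threshold,
    }
```

Resolving ties towards the more severe label is a deliberately cautious design choice for this sketch. A real deployment might prefer a different rule.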

Team Involved

- Lawyers: Guided data collection and annotated training data according to legal standards.

- Linguists: Helped refine language features, ensuring the model correctly interpreted localised incitement expressions.

- Data Scientists & ML Engineers: Developed and trained the model using AWS tools, implementing pre-processing and performance analysis techniques.

- Engineers: Managed AWS architecture, developed the frontend dashboard, and ensured end-to-end deployment.

- Project Managers: Coordinated the development process across teams and ensured regular progress updates.

Technical Approach and Methodology Overview

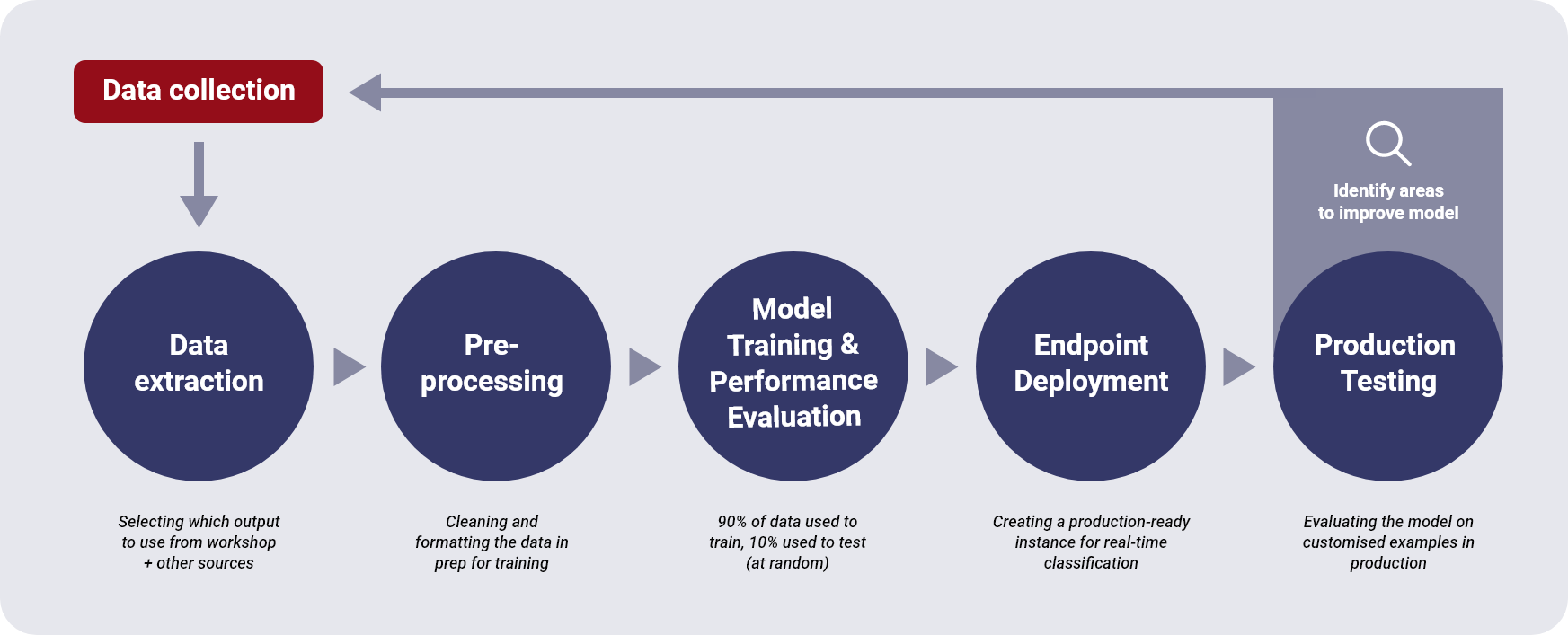

An MLOps methodology was followed in developing and training the AI model. It involves a series of iterations to reach the desired result.

This methodology guides further development while accounting for the limitations described below, so that future enhancements improve the model's detection capabilities and dashboard usability.

- Data Collection & Annotation: Lawyers and other legal professionals collected diverse text data indicative of incitement. They labelled the data into categories for supervised learning.

- Pre-Processing: Engineers cleaned and formatted the raw text, removing inconsistencies to ensure compatibility with the model.

- Model Training & Evaluation: Data scientists trained a custom incitement classifier on Amazon Comprehend, using an MLOps methodology. Performance was evaluated iteratively, using 90% of the data for training and 10% for testing. Results were categorised on a RAG scale.

- Dashboard Development: Engineers created a Next.js dashboard displaying results and other analytics, making it filterable and searchable.

- Deployment & Monitoring: The AWS-hosted model processed large data sets in batches, with access monitoring and cloud resource usage tracking.
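
The pre-processing and 90/10 evaluation split in the steps above could look roughly like the following Python sketch. The source does not list the actual cleaning rules, so the ones here (Unicode normalisation, URL removal, whitespace collapsing) are assumptions. The function names `clean_text` and `split_90_10` and the fixed seed are also hypothetical.

```python
import random
import re
import unicodedata
from typing import List, Sequence, Tuple


def clean_text(raw: str) -> str:
    """Apply illustrative cleaning rules to one piece of raw text."""
    text = unicodedata.normalize("NFKC", raw)    # unify character variants
    text = re.sub(r"https?://\S+", " ", text)    # drop URLs (assumed rule)
    text = re.sub(r"\s+", " ", text)             # collapse runs of whitespace
    return text.strip()


def split_90_10(rows: Sequence[Tuple[str, str]], seed: int = 42) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]]]:
    """Shuffle (label, text) rows and split them 90% train / 10% test."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)        # seeded for repeatable splits
    cut = len(shuffled) * 9 // 10                # integer arithmetic avoids rounding surprises
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    rows = [("GREEN", clean_text(f"  example   post {i} \n")) for i in range(20)]
    train, test = split_90_10(rows)
    print(len(train), len(test))
```

A plain random split is used for brevity. If some RAG categories are rare, a split stratified by label would keep each category represented in the test set.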

Caveats & Limitations

- Data Quality: Model accuracy depends on the training data, which might be biased or incomplete. More diverse data is needed to reduce the risk of overfitting and improve performance.

- Model Interpretation: False positives or negatives can occur, as nuanced text interpretation is challenging.

- Work in Progress: The platform remains a work in progress, and the current version may lack some features planned for future iterations.

- Cost Constraints: Efficient model deployment is crucial to managing costs associated with AWS cloud usage.
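
The false positives and negatives noted under Model Interpretation can be quantified on the held-out test data. The Python sketch below counts them for a single label and derives precision and recall. It is illustrative only: the `precision_recall` function and the label names are hypothetical, and the source does not state which metrics the team actually used.

```python
from typing import Dict, List


def precision_recall(actual: List[str], predicted: List[str], label: str) -> Dict[str, float]:
    """Count errors for one label and derive precision and recall."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")

    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == label and p == label)  # correctly flagged
    fp = sum(1 for a, p in pairs if a != label and p == label)  # flagged in error
    fn = sum(1 for a, p in pairs if a == label and p != label)  # missed

    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positives": fp,
        "false_negatives": fn,
    }
```

For a Red label, a false negative (missed incitement) and a false positive (harmless text flagged) carry different costs, so it helps to report the two counts separately rather than a single accuracy figure.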

Liability

The user accepts complete liability in utilising and interpreting the results of the i3 tool. MMA or its affiliate companies will not be liable for any events resulting in loss or damage, and no negligence or failure to disclose or any factors included in the tool will be the liability of MMA.

Get in Touch

Whether you find value in our tool, have questions, or are interested in collaborating, we’d love to hear from you.